Organizations are drowning in data, yet often struggle to turn it into meaningful action. This series has explored navigating the data landscape - how to observe, orient, and decide. In this final installment, we tackle the crucial "Act" stage of the OODA loop, providing practical strategies to translate insights into impactful initiatives. It's time to close the loop and complete the cycle, turning data into doing.

Understanding the landscape

In any organization, different stakeholders have unique data needs. A recruiter focuses on candidate pipelines, while a department head cares about business outcomes like employee retention. Misunderstandings can easily arise if everyone isn't looking at the data through the same lens.

You may be familiar with the game of Chinese whispers, in which a message is passed from one person to the next and changes slightly each time. A related story shows how two people with different frames of reference can arrive at different points of view. Two automotive engineers argued for half an hour about the angle of an engine mount - one insisted it was 40 degrees, the other 50. Their disagreement escalated until a manager stepped in and asked for their reference point. "Vertical!" one exclaimed. "Horizontal!" said the other. They were both right, just using different frames of reference.1 Their lack of a common perspective created unnecessary conflict.

That's why role-specific dashboards are so crucial. They provide tailored views of the data, ensuring that each stakeholder has the information they need to make informed decisions without the risk of misinterpretations. The core challenge is tool integration. Individual tools excel in specific functions (ATS, BI, visualization) but rarely offer a complete solution.

Stakeholder needs and required data granularity vary widely, making one-size-fits-all dashboards impractical. Organizations resort to time-consuming, error-prone workarounds, increasing costs and reducing efficiency. Then again, switching platforms or integrating multiple tools adds complexity. Even robust tools like LinkedIn Recruiter or Stepstone are limited without full integration. Remember the old software engineering adage that there are no permanent solutions, only trade-offs. You can't have everything fast, good, and cheap at the same time.

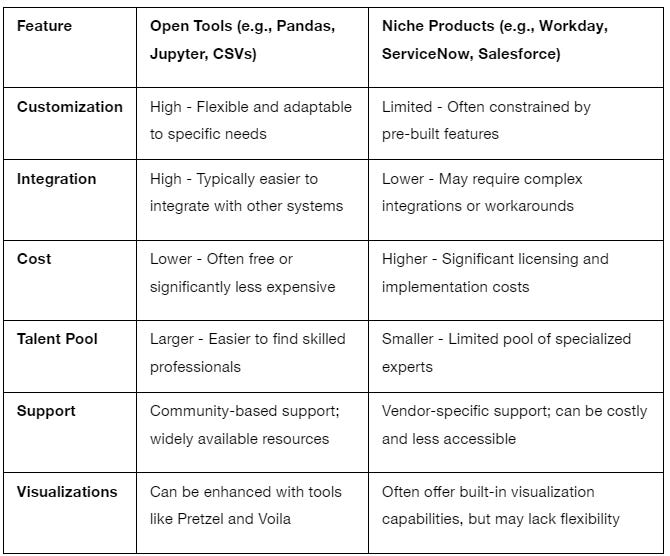

The choice of tools depends on your needs and resources. Open formats offer flexibility and cost savings, but require technical expertise and restrict data access. Niche products offer specialized functionality and vendor support, but can be expensive and less customizable. Niche products (Collibra, Workday) also have smaller talent pools, making it harder to find specialists. For legacy data, open formats are often more practical and cost-effective because of interoperability and customization. But that's not enough. Balance domain expertise with technical skills: even flexible tools (Python, Tableau) require training time that leadership often lacks. Thoughtfully plan your data architecture and user interface.

Having been at the same company for a number of years, I've observed a recurring pattern. Newly arrived executives often introduce their preferred tools and metrics without integrating them with existing systems. This disrupts continuity, especially when tracking long-term trends with anonymized data. Each time, the team must spend time and resources to adapt, often losing valuable historical data in the process. This creates a cycle of inefficiency: by the time the team adapts, the executive may have moved on, and the cycle repeats with their successor. New tools and metrics should build on the existing systems and their history. Otherwise, you risk repeating history without truly learning from it.

A stable hiring analytics framework with consistent metrics is essential for reliable, actionable insights, especially for anonymized and aggregated data. While it is impossible to anticipate every question, well-organized and validated data can address recurring inquiries and provide deeper insights. Shifting from reactive transactions to relationship building and coaching fosters a more adaptive and resilient hiring process. Iterative rather than linear progress often sparks unexpected innovation. Readily available, refined data supports proactive decisions and answers common questions.

Thinking in terms of system relationships

Traditional performance management often relies on past data - a "rearview mirror" approach. This works well for linear projections, but what if the road ahead is full of unexpected twists and turns? Systems thinking offers a more powerful alternative, encouraging us to view performance as interconnected elements, where actions have ripple effects. Consider onboarding new recruiters. Metrics focused solely on immediate productivity miss the bigger picture. Integration takes time - a circular process of learning and adaptation.

As organizations scale, workloads increase, putting stress on the system. This can create a vicious cycle: high stress lowers productivity, which further increases stress. This reinforcing cycle, often unseen until it is too late, must be identified and managed early. Daniel H. Kim observed: “(...) extra pressure may generate more stressful events, which will accumulate into increasing levels of stress. High stress levels will then lead to lower productivity, which further reduces work capacity and leads to more stressful events. This reinforcing loop of accumulating stress is intangible, yet all too real for many people.”2

By visualizing these relationships, such as through a flowchart that shows stress accumulators and their resource allocations, managers can better identify pressure points and more effectively reallocate tasks and resources. Such diagrams can also show how seemingly isolated actions can have far-reaching effects across the organization, helping leaders make more informed decisions that consider the whole system, not just its parts.
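The reinforcing loop Kim describes can also be explored with a toy simulation. The sketch below is only illustrative: the function name `simulate_stress_loop`, the linear relationships, and the coefficients `stress_gain` and `productivity_loss` are all assumptions made for the example, not values from the source.

```python
def simulate_stress_loop(workload, capacity, weeks,
                         stress_gain=0.5, productivity_loss=0.1):
    """Toy reinforcing loop: unfinished work adds stress, stress cuts output.

    All coefficients are illustrative assumptions, not measured values.
    Returns one (week, work_done, stress) tuple per simulated week.
    """
    stress = 0.0
    history = []
    for week in range(1, weeks + 1):
        # Higher accumulated stress lowers productivity (floored at zero).
        productivity = max(0.0, 1.0 - productivity_loss * stress)
        work_done = capacity * productivity
        # Work that could not be completed this week turns into new stress.
        shortfall = max(0.0, workload - work_done)
        stress += stress_gain * shortfall
        history.append((week, work_done, stress))
    return history


if __name__ == "__main__":
    # A team with capacity for 10 units of work is asked to deliver 11.
    for week, work_done, stress in simulate_stress_loop(11, 10, weeks=5):
        print(f"week {week}: work done {work_done:.2f}, stress {stress:.2f}")
```

In this toy model a workload only slightly above capacity is enough to start the loop: each week the shortfall grows, so stress accumulates faster and output falls further. When workload matches capacity, stress stays at zero.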

Now let's apply systems thinking to sales. Think of your sales team as a system, much like a dairy farm (!). The diagram below, adapted from a milk production model, illustrates the dynamics of sales growth. It shows the flow of resources and their impact on overall sales performance. Think of it as a simplified visual representation of how your sales team grows and generates revenue. “The same generic resource development structure underlies both models. Although we may debate whether it takes longer to produce a milk cow or a sales manager, we can both agree that the structure of both processes is fundamentally the same.”3

Here's how to interpret the elements:

Hires (Births): New salespeople entering the system.

Trainees (Calves): New hires developing their skills.

Training (Maturation): Time and resources invested in training. The rate reflects how quickly trainees become productive.

Sales Managers (Milk Cows): Productive team members generating revenue.

Annual Sales (Annual Milk Production): Total sales revenue - the system's output.

Sales per Manager (Milk per Cow): Individual productivity.

Attrition (Deaths/Quits): Salespeople leaving the team.

Arrows depict flow; loops show feedback mechanisms (e.g., how training impacts the number of productive managers).

By visualizing sales growth in this way, you can identify key factors that influence performance, such as hiring rates, training effectiveness, and attrition. This systems perspective helps you understand the interdependencies within your organization and make more informed decisions to improve overall results. Just as a farmer manages the various elements of their dairy farm to maximize milk production, you can manage the different aspects of your team to drive sales growth.
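The stocks and flows listed above (hires, trainees, training, sales managers, attrition, annual sales) can be turned into a small simulation. This is a minimal sketch, not Kim's model: the class `SalesTeam`, the function `simulate_sales_team`, the yearly time step, and every number in the usage example are assumptions chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class SalesTeam:
    trainees: float   # stock: new hires still developing their skills
    managers: float   # stock: productive team members generating revenue


def simulate_sales_team(team, years, hires_per_year, training_years,
                        attrition_rate, sales_per_manager):
    """Step a simple stock-and-flow model forward one year at a time.

    Returns one (year, trainees, managers, annual_sales) tuple per year.
    """
    rows = []
    for year in range(1, years + 1):
        # Flow: a share of trainees completes training each year.
        graduates = team.trainees / training_years
        # Flow: a share of productive managers leaves each year.
        leavers = team.managers * attrition_rate
        team = SalesTeam(
            trainees=team.trainees + hires_per_year - graduates,
            managers=team.managers + graduates - leavers,
        )
        rows.append((year, team.trainees, team.managers,
                     team.managers * sales_per_manager))
    return rows


if __name__ == "__main__":
    start = SalesTeam(trainees=0, managers=10)
    for row in simulate_sales_team(start, years=5, hires_per_year=4,
                                   training_years=2, attrition_rate=0.2,
                                   sales_per_manager=100):
        print(row)
```

With these made-up inputs, sales dip in the first year because attrition acts immediately while new hires are still in training. That delay is exactly the kind of effect that a metric focused only on immediate productivity would miss.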

Getting to know the front lines

My former manager was a big proponent of data literacy. He emphasized that a forecast is not the plan itself. "You can't change the forecast just because you're off track," he would say. He compared it to planning a road trip. Imagine driving a camper across Spain. Your route (the plan) might change because of detours or weather. But your estimated time of arrival (the forecast) remains the same - a fixed benchmark against which to measure your actual progress. You can still assess how you're doing relative to the original forecast. The plan is adaptive; the forecast is the benchmark. It informs your decisions, telling you when to push harder or when to ease off. In recruiting, it's the same: your sourcing strategies and interviewing process (the plan) may evolve, but your initial hiring projections (the forecast) help you gauge your overall effectiveness.
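One way to keep the forecast fixed while the plan changes is to compare cumulative actuals with the original projection at every checkpoint. The sketch below assumes monthly hiring numbers; the function name `progress_against_forecast` and the figures are hypothetical.

```python
def progress_against_forecast(forecast, actual):
    """Compare cumulative actuals with a fixed forecast, period by period.

    `forecast` is the original projection and is never edited afterwards.
    `actual` holds the results recorded so far and may be shorter.
    Returns (period, cumulative_forecast, cumulative_actual, gap) tuples.
    """
    report = []
    cumulative_forecast = 0
    cumulative_actual = 0
    for period, (planned, achieved) in enumerate(zip(forecast, actual), start=1):
        cumulative_forecast += planned
        cumulative_actual += achieved
        gap = cumulative_actual - cumulative_forecast
        report.append((period, cumulative_forecast, cumulative_actual, gap))
    return report


if __name__ == "__main__":
    hiring_forecast = [5, 5, 5, 5]   # fixed at the start of the year
    hires_so_far = [3, 4, 6]         # what actually happened
    for row in progress_against_forecast(hiring_forecast, hires_so_far):
        print(row)
```

A negative gap tells you when to push harder, and a positive one tells you when you can ease off. In both cases the forecast itself stays untouched.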

How often does this happen in your organization, where the expectations of higher management are far removed from the reality of the hands-on specialists on the front lines? In “Communicating with Data,” Carl Allchin observes that: “Even if your stakeholder has spoken to the end user, the requirements will be second- or third-hand. Each link in the chain will add their own interpretation and needs into the requirements, further convoluting the clarity around what is essential.”4

When designing KPIs, remember that the map is not the territory. Metrics should reflect the reality of the work, not an idealized version. Involving those who do the work is essential. It ensures that metrics are relevant and fosters ownership, which increases motivation. While shadowing isn't always feasible, it's the best way to identify bottlenecks and optimize workflows.

The authors of "Agile HR" suggest "Gemba walks" - observing how value is created: “A Gemba walk (a Japanese term for stepping back from your daily tasks to observe how value is created) is a great way to learn from others and gain different perspectives on how to approach various Agile activities. Go and observe Agile teams in action. Ask to attend their stand-ups, customer reviews and retrospectives as a silent observer and then discuss insights.”5

Another approach, Kaizen, encourages continuous improvement through front-line suggestions. Even small changes can make a big difference. As Robert Maurer recounts in "The Spirit of Kaizen," a Toyota manager explained that even bad suggestions are valuable: “These are very important to us because they tell us our training of this employee has been ineffective and we can now help this person to improve.”6 Don't dismiss ideas before careful consideration. The A3 problem-solving method, also from Toyota, further emphasizes learning from mistakes and documenting problems, outcomes, and proposed changes. (See "Understanding A3 Thinking" for more7).

Incorporating "Mission Command" into hiring analytics can transform performance measurement and team management. Mission Command combines centralized intent with decentralized execution, allowing freedom and speed of action within defined constraints. As military historian Stephen Bungay states in “The Art of Action”: “Don’t tell people what to do and how to do it. Instead, be as clear as you can about your intentions. Say what you want people to achieve and, above all, tell them why. Then ask them to tell you what they are going to do as a result.”8

In recruiting analytics, this means clearly communicating the why behind your KPIs while giving teams the autonomy to achieve them as they see fit. Here are some examples:

Instead of: "Reduce time-to-fill by 10%."

Mission Command: "We need to reduce time-to-fill to improve candidate experience and ensure we're not losing top talent to competitors. How can we achieve a 10% reduction?"

Instead of: "Increase the number of qualified candidates in the pipeline."

Mission Command: "We need a stronger pipeline of qualified candidates to ensure we have a diverse pool of talent for each role. How can we improve our sourcing strategies to achieve this?"

Instead of: "Improve the quality of hire."

Mission Command: "We need to ensure we're hiring candidates who are not only skilled but also a good cultural fit, contributing to long-term retention and team success. How can we refine our assessment process to achieve this?"

When team members understand the intent behind the metrics, they can take proactive steps and make decisions that align with organizational goals, fostering agility and innovation. This approach improves performance by encouraging active contribution, not just passive compliance.

Mission Command also emphasizes trust and empowerment. When team members know the "why" and are trusted with the "how," they become more engaged and motivated. This leads to faster responses and more effective problem solving. In recruiting, this translates into dynamic, responsive hiring processes where recruiters and managers make the best decisions for each situation, significantly increasing the effectiveness of recruiting strategies.

And there's plenty of evidence that ideas should come from the bottom up, and not only when it comes to measuring performance. Daniel Levitin, in "The Organized Mind," argues that the same employees working in the same place can reach very different levels of productivity under different employers. “In 1982, GM closed the Fremont plant. Within a few months, Toyota began a partnership with GM and reopened the plant, hiring back 90% of the original employees. The Toyota management method was built around the idea that, if only given the chance, workers wanted to take pride in their work, and wanted to see how their work fit into the larger picture.”9 The result was reduced absenteeism and increased engagement. The key difference was the attitude of the managers toward the workers. Building team spirit often proves more effective than offering bonuses to motivate workers.

Tracking the meaningful work

In her very practical book, "Making Work Visible," Dominica DeGrandis emphasizes that you can't improve what you can't see. She identifies five "thieves of time" that kill productivity:

Too much work in progress (WIP): Partially completed work clogging the system.

Unknown dependencies: Unforeseen roadblocks delaying completion.

Unplanned work: Interruptions disrupting focused work.

Conflicting priorities: Competing projects hindering clear focus.

Neglected work: Abandoned tasks draining resources.10

Familiar from Agile, Kanban boards promote transparency, limit WIP, improve workflow, and aid in prioritization. The resulting visibility allows for data-driven adjustments. As you can see below, swimlanes provide an overview of tasks that are owned by teams, not individuals.

Individual swimlanes on Kanban boards can create problems: individual-centric stand-ups, perceived unequal effort, limited skill diversification, and reduced collaboration. Instead, focus on the work, not the person. This empowers team members to work outside of their assigned roles and contribute to the overall success. Focusing on individual performance discourages collaboration and potentially reduces business value. As the author claims, “Making work visible is one of the most fundamental things we can do to improve our work because the human brain is designed to find meaningful patterns and structures in what is perceived through vision.”11
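Limiting work in progress is easy to check once the board is available as data. The following is a minimal sketch under stated assumptions: cards are plain `(title, column)` pairs, the column names and limits are invented for the example, and `columns_over_wip_limit` is a hypothetical helper rather than part of any Kanban tool's API. Note that it counts work per column, not per person, in line with the focus on the work itself.

```python
from collections import Counter


def columns_over_wip_limit(cards, wip_limits):
    """Return the columns whose card count exceeds the agreed WIP limit.

    `cards` is a list of (title, column) pairs.
    `wip_limits` maps a column name to its maximum number of cards.
    The result maps each offending column to (card_count, limit).
    """
    counts = Counter(column for _, column in cards)
    return {
        column: (counts[column], limit)
        for column, limit in wip_limits.items()
        if counts[column] > limit
    }


if __name__ == "__main__":
    board = [
        ("Backend developer", "Sourcing"),
        ("Data analyst", "Interviewing"),
        ("Product owner", "Interviewing"),
        ("UX designer", "Interviewing"),
        ("Sales lead", "Offer"),
    ]
    limits = {"Sourcing": 3, "Interviewing": 2, "Offer": 2}
    print(columns_over_wip_limit(board, limits))
```

In this example only the "Interviewing" column is flagged, with three cards against a limit of two.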

Beyond Kanban, it is also tempting to bring some other insights from the world of product management. OKRs (Objectives and Key Results) provide a framework for goal setting that aligns well with Mission Command. They help translate strategic intent into measurable actions, which is critical for the "Act" phase of the OODA loop. While we've discussed OKRs in previous articles, it's important to reiterate their value in driving action.

In recruiting, as Erik Van Vulpen mentions in "The Basic Principles of People Analytics," focusing on frequent and reliable metrics can lead to better insights.12 For example, instead of just tracking hires, consider metrics such as the number of qualified candidates sourced or the number of interviews conducted. By reducing the influence of randomness and focusing on more granular data, organizations can gain a clearer understanding of their recruiting effectiveness and make more informed decisions. This approach aligns with the principles of Mission Command and OKRs, which emphasize clear intent, decentralized execution, and continuous improvement.

A common challenge is balancing the need for focus (narrowing down to a few key KPIs) with the need for a comprehensive view. A KPI hierarchy helps address this by showing how individual metrics contribute to broader goals.

Itamar Gilad, a veteran product manager at Google, cautions in "OKRs Done Right" that even well-intentioned OKRs can be misused. They are simply containers for goals, and bad goals are amplified just as easily as good ones. “In fact, of all the management tools, OKR is the easiest to misuse, overuse and abuse - many companies fall into this trap. This is a major problem because bad use of OKR can amplify the issues the org is troubled with rather than fix them.”13

Focus on measuring impact and outcomes (e.g., user activation rates), not just outputs (e.g., launch of a feature). It's also important to keep performance reviews separate from OKRs. Linking them encourages minimal ambition and "gaming the system." OKRs should inspire bold goals; evaluations shouldn't stifle them.

The chart below14 may look complicated. On the one hand, we should limit ourselves to 1-3 KPIs; on the other hand, that may not be enough. Fortunately, we can arrange KPIs in a hierarchy to see which ones feed into which. Whether it is understanding product growth or team performance, such a hierarchy can be used to outline points of interest for different stakeholders.
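A KPI hierarchy can be represented as a simple tree, which makes it possible to show each stakeholder how "their" metric rolls up to the top-level goal. The sketch below is illustrative only: the tree in `KPI_TREE` is a made-up arrangement of recruiting metrics mentioned in this article, not a reproduction of the chart, and `path_to` is a hypothetical helper.

```python
# Hypothetical hierarchy: each KPI maps to the KPIs that feed into it.
KPI_TREE = {
    "Quality hires per quarter": {
        "Time-to-fill": {
            "Interviews conducted": {},
            "Qualified candidates sourced": {},
        },
        "Offer acceptance rate": {},
    }
}


def path_to(kpi, tree, trail=()):
    """Return the chain of KPIs from the top-level goal down to `kpi`.

    Returns None if `kpi` does not appear anywhere in the hierarchy.
    """
    for name, children in tree.items():
        here = trail + (name,)
        if name == kpi:
            return here
        found = path_to(kpi, children, here)
        if found:
            return found
    return None


if __name__ == "__main__":
    print(" -> ".join(path_to("Interviews conducted", KPI_TREE)))
```

A recruiter might watch a leaf such as "Interviews conducted" while a department head watches the root. The path between the two makes the link explicit.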

Creating a dashboard

As mentioned earlier, a single dashboard that serves multiple stakeholders - such as a manager monitoring tool usage, another monitoring response rates, and a team leader assessing the quality ratio of prospects - requires carefully differentiated settings to meet their different needs without clutter or confusion. Each stakeholder's view should be tailored to provide the most relevant data for their specific role, ensuring that the dashboard is not only comprehensive, but also concise and clear. This was the feedback I received from my stakeholders when I tried to use multiple datasets that required a lot of calculations under the same hood.

Encourage continuous improvement through iterative dashboard design. It is better to start small and incorporate user feedback as you move forward. This avoids making costly assumptions when developing a full product in one go. Creating a dashboard that can flexibly serve these diverse interests without clutter or compromise requires thoughtful planning of data architecture and user interface design.

Usability is paramount. As Steve Krug advises in "Don't Make Me Think, Revisited": “Resist the impulse to add things. When it’s obvious in testing that users aren’t getting something, the team’s first reaction is usually to add something, like an explanation or some instructions. But very often the right solution is to take something (or some things) away that are obscuring the meaning, rather than adding yet another distraction”.15 Simplicity often requires effort. Behind elegant metrics may lie complex calculations. Handle these behind the scenes (separate spreadsheets, hidden tables) to maintain dashboard clarity and actionability.

When designing dashboards, include goals and thresholds for key metrics. Visual cues - such as color coding (red for underperforming metrics) - enable quick assessment of performance. This makes the dashboard a powerful, intuitive decision-making tool. ServiceNow's "Build a Performance Management Approach" offers helpful guidance:

❑ Do you have KPIs defined that clearly indicate when you have fulfilled an expected business outcome?

❑ Do all KPIs have targets defined that stakeholders agree with?

❑ Have stakeholders defined thresholds to inform when an action is required?16

Now you can pick three KPIs and for each:

Focus on the biggest expected wins (like better processes or lower costs). Be super clear about what you're measuring. Make sure everyone agrees these KPIs show success and everyone measures them the same way.

Set a starting point to compare future results. If you have past data, great. If not, work with people who know the processes to get a reasonable estimate. You don't need a perfect baseline, just one that makes sense to everyone.

Find early signs of progress. Work with process owners to spot short-term milestones that show you're on track. These should help you adjust if needed. For example, if your KPI is faster problem-solving, track how many times someone touches a problem and how long each touch takes. This helps you see where to improve.
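The targets, thresholds, and color coding discussed above can be expressed in a few lines. This is a sketch under stated assumptions: the `Kpi` class, the `status` function, the intermediate "amber" band, and all numbers are invented for illustration and would need to be agreed with your stakeholders.

```python
from dataclasses import dataclass


@dataclass
class Kpi:
    name: str
    target: float      # value stakeholders agree counts as success
    threshold: float   # value at which action is required
    higher_is_better: bool = True


def status(kpi, value):
    """Map a KPI value to a dashboard color.

    green: target met; amber: between target and threshold;
    red: at or beyond the action threshold.
    """
    # Flip the sign for "lower is better" KPIs so one comparison fits both.
    sign = 1 if kpi.higher_is_better else -1
    if sign * value >= sign * kpi.target:
        return "green"
    if sign * value > sign * kpi.threshold:
        return "amber"
    return "red"


if __name__ == "__main__":
    time_to_fill = Kpi("Time-to-fill (days)", target=40, threshold=50,
                       higher_is_better=False)
    for days in (38, 45, 55):
        print(days, status(time_to_fill, days))
```

Keeping the rule in one small function like this also keeps the calculation behind the scenes, so the dashboard itself only has to show the resulting color.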

I also shared some of these insights during Brainfood Live On Air.

The journey begins

As the old saying goes, "When you go to sea, take one chronometer or three, but never two." This wisdom from the age of celestial navigation holds a valuable lesson for data-driven decision making. One reliable chronometer, or three for cross-referencing, ensures accurate navigation. Two conflicting chronometers lead to confusion and potential disaster. Similarly, in business, rely on a single, trusted data source or use multiple sources that can be cross-referenced to validate data integrity.
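The chronometer rule translates directly into a cross-check between data sources. The sketch below assumes the same figure (for example, hires in a quarter) is reported by one, two, or three systems; the function `cross_check`, the tolerance, and the numbers are hypothetical.

```python
from statistics import median


def cross_check(readings, tolerance):
    """Reconcile the same figure reported by several sources.

    One source: trust it. Three or more: use the median and list the
    readings that deviate from it by more than `tolerance`.
    Two sources that disagree cannot be reconciled, so an error is raised.
    Returns (agreed_value, outliers).
    """
    if not readings:
        raise ValueError("no readings to check")
    if len(readings) == 1:
        return readings[0], []
    if len(readings) == 2:
        first, second = readings
        if abs(first - second) <= tolerance:
            return (first + second) / 2, []
        raise ValueError("two sources disagree and nothing shows which is wrong")
    agreed = median(readings)
    outliers = [r for r in readings if abs(r - agreed) > tolerance]
    return agreed, outliers


if __name__ == "__main__":
    # Hires per quarter according to three hypothetical systems.
    print(cross_check([120, 121, 150], tolerance=2))
```

With three sources the odd one out is easy to spot. With two conflicting sources the function can only report that something is wrong, which is the situation the saying warns against.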

Our journey through the data jungle is not a one-off expedition; it's an ongoing process of exploration, adaptation and action. Throughout this series, we've explored how to observe, orient, decide and finally act within the data landscape. We've learned how to identify key metrics, visualize system dynamics, and give teams the autonomy and context they need to make informed decisions. We've also emphasized the importance of clear communication, stakeholder alignment and continuous improvement.

Data is the map, but you are the navigator. The human element - your experience, communication skills, and ability to assess cultural fit - remains critical. Data informs, but it doesn't replace judgment.

Now it's time to put these principles into action. Review your data regularly, analyze trends, and adjust your course as needed. Foster a culture of learning and collaboration where data empowers, not dictates. By adopting these strategies, you can navigate the complexities of the data jungle and set a course for lasting success.

-- Michael Talarek

Daniel H. Kim, “Systems Thinking Tools: A User's Reference Guide”

Daniel H. Kim, “Systems Thinking Tools: A User's Reference Guide”

Carl Allchin, “Communicating with Data: Making Your Case with Data”

Natal Dank and Riina Hellström, “Agile HR: Deliver Value in a Changing World of Work”

Robert Maurer, “The Spirit of Kaizen”

Durward K. Sobek and Art Smalley, “Understanding A3 Thinking: A Critical Component of Toyota's PDCA Management System”

Stephen Bungay, “The Art of Action”

Daniel Levitin, “The Organized Mind”

Dominica DeGrandis, “Making Work Visible: Exposing Time Theft to Optimize Work & Flow”

Dominica DeGrandis, “Making Work Visible: Exposing Time Theft to Optimize Work & Flow”

Erik Van Vulpen, “The Basic Principles of People Analytics” | https://www.aihr.com/resources/The_Basic_principles_of_People_Analytics.pdf

Itamar Gilad, “OKRs Done Right” | https://itamargilad.com/wp-content/uploads/2022/01/Ebook-OKRs-Done-Right-Itamar-Gilad.pdf

Based on an example from https://itamargilad.com/the-three-true-north-metrics-that-your-product-and-business-need/

Steve Krug, “Don't Make Me Think, Revisited: A Common Sense Approach to Web Usability”