In the ever-expanding realm of data, information can resemble a dense and complex jungle. Countless numbers lie before us like a vast, unexplored wilderness. While seemingly endless metrics surround us, the ability to critically analyze them remains paramount. This article explores the core principles of effective data analysis, emphasizing the need to go beyond isolated numbers and cultivate a nuanced understanding of the broader context. We'll look at concepts inspired by the natural world and how shifting perspectives can reveal hidden patterns.

By adopting a "bird's eye view" and a "ground level" perspective, akin to a bird surveying the land and a worm tunneling through the soil, we gain a more complete understanding of the data landscape. We'll learn to distinguish between individual data points and the overarching patterns, just as we distinguish individual trees from a thriving forest. We'll also discover how seemingly unrelated data points, like constellations of individual stars, can reveal hidden insights.

We'll also explore hidden depths, akin to an iceberg with only a fraction visible above the surface, and the need to consider leading indicators alongside lagging ones, much like anticipating sunrise after sunset. Finally, we'll discuss the importance of avoiding a "blinkered" approach, ensuring that we don't rely solely on data without acknowledging its potential biases and limitations. By integrating data insights with human judgment and a holistic understanding of the issue, we can unlock the true potential of data for informed and impactful decision-making.

From the view of a bird to a worm

Trained in the social sciences, I've traditionally focused on qualitative analysis. While numbers have value, by themselves they can feel cold and impersonal. Is a score of 4 good or bad? It all depends on the context. Early on, my focus on raw data without sufficient explanation sometimes led stakeholders to question its validity. The data itself was sound; the problem was presenting the insights clearly. This experience reinforced the critical role of clear communication in translating data into actionable insights.

Numbers are just a vessel for whatever meaning you give them. If you know how to work with them, they can be powerful, but that's all they're there for: to quantify and to make the decision-making process more transparent. With the abundance of data and dashboards, and the ease of transforming them, it's easy to lose sight of what's most important. Research by Daniel Kahneman and others has shown that framing, or how information is presented, can have a significant impact on decisions. For example, people may perceive a product differently depending on whether it's described as "60% fat" or "40% fat-free", even though both labels state the same fact.

Data analysis thrives on context. It's not just about the numbers, but the story they tell. As discussed in "Communicating with Data," even seemingly simple questions like "What happened to our profits?" require a deeper understanding. Global economic trends, an organization's stage of growth, and even when the question was last asked all play a role in shaping the answer.

Questioning techniques explored in “The Art of Data Science: A Guide for Anyone Who Works with Data” can be particularly useful. The type of question you ask about your data is critical to interpreting the results correctly. There are several types:

descriptive (think, "What's going on with this data?"),

exploratory (looking for patterns),

inferential (testing whether those patterns hold in a larger population),

predictive (who will do this thing in the future?),

causal (why does this thing happen?),

and mechanistic (how does this thing work?).

For example, an exploratory question might examine dietary patterns, while a predictive question might ask who is likely to adopt a healthy diet in the future. Understanding these types of questions will help you avoid drawing the wrong conclusions from your analysis.

Stakeholder motivations also matter. Someone under pressure to meet targets might seek a deep dive into performance metrics to identify areas for improvement. Conversely, high performers may want validation of their success through data-driven analysis. Without context, data can paint an incomplete picture.

Tim Harford, author of "The Data Detective," stresses the importance of considering multiple perspectives: "both the worm's-eye view and the bird's-eye view". The worm's-eye view is the close-up look at the details; the bird's-eye view is the big picture seen from high above. These perspectives will give you different results, and sometimes they may even contradict each other. Weighing both should be the starting point of any investigation. This is precisely why a simple question like "What do you want to do with these numbers?" can unlock the "why" behind a data request, leading to a more insightful and actionable analysis.

Seeing sheep in the field

When setting up a system to collect and display KPIs, precision in the initial configuration is critical. Common challenges include keeping the data current, collecting and reporting it consistently, and labeling it clearly to avoid user confusion. Unfortunately, the perfect data set is rare.

Keeping data current matters more than it may seem. Carl Allchin, author of "Communicating with Data," emphasizes: "If the reporting is not updated, the reports will quickly be ignored, and other 'uncontrolled' methods of reporting will arise. By not receiving the analytics needed, cottage industries will begin to pop up across the organization to form the reporting. All of the hard work on setting up strategic data sources is eradicated, as people will cobble together the piece they need." But up-to-date reporting is not enough on its own: we also need to agree on what exactly is being counted.

Consider a seemingly simple question: How many sheep are there in this field? At first glance, counting the sheep in a field seems straightforward. But look closer and things get interesting. Is there a pregnant ewe about to give birth? A young lamb by her side? The answer, as in data analysis, depends on how we define and filter what we count. Is the lamb a separate sheep, and should the pregnant ewe count as more than one sheep because of her unborn offspring?

This mirrors data analysis where the filters applied - such as counting only adult sheep or including lambs and unborn lambs - can dramatically change the results. Are we tracking total flock size or breeding potential? Just as in the field, where the purpose of the count dictates the counting method, the objectives behind the data collection significantly influence the interpretation of the data. This story about counting sheep (credited to Michael Blastland, co-creator of "More or Less, The things we fail to see") is an important reminder to consider the "why" behind data collection and what filters may be necessary to get a clear and accurate picture.
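To make the idea concrete, here is a minimal Python sketch of the sheep example. The field (one pregnant ewe, one lamb), the `Sheep` class, and the three counting functions are hypothetical, made up for illustration; the point is only that one field produces three different answers depending on the filter.

```python
from dataclasses import dataclass


@dataclass
class Sheep:
    name: str
    is_adult: bool
    unborn_lambs: int = 0  # lambs a pregnant ewe is carrying


# Hypothetical field: one pregnant ewe with a young lamb by her side
field = [
    Sheep("ewe", is_adult=True, unborn_lambs=1),
    Sheep("lamb", is_adult=False),
]


def count_adults(flock):
    """Adults only, e.g. when tracking breeding stock."""
    return sum(1 for s in flock if s.is_adult)


def count_living(flock):
    """Every sheep standing in the field, lambs included (total flock size)."""
    return len(flock)


def count_including_unborn(flock):
    """Living sheep plus unborn lambs, e.g. when planning feed for next season."""
    return len(flock) + sum(s.unborn_lambs for s in flock)


print(count_adults(field), count_living(field), count_including_unborn(field))
# 1 2 3
```

Nothing about the field changes between the three calls; only the definition does. That is why the filter, and the purpose behind it, belongs next to any number you report.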

Seeing the forest for the trees

Data comes in different flavors: raw or aggregated. Imagine a huge forest. Raw data is like examining each individual tree: detailed but overwhelming. Aggregates are like stepping back and looking at the overall composition of the forest: easier to understand but lacking in detail. When you encounter a table, ask yourself: is this a summary or the raw data? Knowing this helps you formulate your questions. Raw data invites exploration: what patterns emerge, and does it tell the whole story? Summaries, on the other hand, sacrifice detail for a broader view: we can see trends but miss nuances.
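A small Python sketch can show what a summary hides. The sales rows below are invented for illustration: two regions with identical totals, only one of which depends on a single large sale.

```python
from collections import defaultdict

# Hypothetical raw data: one row per sale (the individual trees)
raw_sales = [
    ("North", 120), ("North", 80), ("North", 100),
    ("South", 10), ("South", 10), ("South", 280),
]

# Aggregate: total sales per region (the forest)
totals = defaultdict(int)
for region, amount in raw_sales:
    totals[region] += amount
print(dict(totals))  # {'North': 300, 'South': 300}

# Back in the raw rows: what share of each total comes from the single largest sale?
largest_share = {
    region: round(max(a for r, a in raw_sales if r == region) / total, 2)
    for region, total in totals.items()
}
print(largest_share)  # {'North': 0.4, 'South': 0.93}
```

The summary says the regions are equal; the raw rows reveal that South's result rests almost entirely on one transaction.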

Understanding how metrics are calculated is also critical. Does the "monthly average" include all employees, or just those who are actively working (not on vacation)? Filters are essential for narrowing the focus of the data. Analysts define precisely what to include and what to exclude, ensuring that they're comparing apples to apples, not apples to oranges.
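The "monthly average" question can be sketched in a few lines of Python. The employees and figures are hypothetical, and using `None` for someone on leave for the whole month is an assumed convention.

```python
from statistics import mean

# Hypothetical monthly output per employee; None = on leave the whole month
monthly_output = {"Ana": 40, "Ben": 36, "Chen": None, "Dara": 44}

# Definition A: everyone on the payroll, counting leave as zero output
avg_all = mean(v if v is not None else 0 for v in monthly_output.values())

# Definition B: only employees who were actively working
avg_active = mean(v for v in monthly_output.values() if v is not None)

print(avg_all, avg_active)
```

The same four people yield an average of 30 under the first definition and 40 under the second, so the filter has to be stated alongside the number.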

But even the most carefully collected data isn't immune to errors. These can occur during collection, entry, processing, or transmission. Typos, duplicates, and mislabeling are just a few of the usual suspects. Because such errors can corrupt conclusions, analysts fight back with data validation tools, cleaning techniques, and error-checking algorithms. Excel spreadsheets, as versatile as they are, can introduce problems that are hard to spot, such as a formula that has been silently overwritten with a fixed value.
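Basic validation checks are easy to automate. This Python sketch flags exact duplicates and labels outside an agreed list; the records and the `VALID_DEPARTMENTS` set are hypothetical, and a real pipeline would add many more rules.

```python
from collections import Counter

# Hypothetical raw records: (employee_id, department)
records = [
    (101, "Sales"),
    (102, "Marketing"),
    (101, "Sales"),        # duplicate entry
    (103, "Sles"),         # typo in the label
    (104, "Engineering"),
]
# Hypothetical list of agreed department labels
VALID_DEPARTMENTS = {"Sales", "Marketing", "Engineering"}


def find_duplicates(rows):
    """Return each row that appears more than once."""
    counts = Counter(rows)
    return [row for row, n in counts.items() if n > 1]


def find_invalid_labels(rows, allowed):
    """Return rows whose department is not an agreed label (e.g. typos)."""
    return [row for row in rows if row[1] not in allowed]


print(find_duplicates(records))                         # [(101, 'Sales')]
print(find_invalid_labels(records, VALID_DEPARTMENTS))  # [(103, 'Sles')]
```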

Dirty data has other problems too. Critical information can be missing because of human error or technical glitches, and if left unaddressed, the gaps can bias the results. To mitigate this, analysts can "impute" missing values based on existing data, use models to estimate them, or remove problematic data points altogether. Problematic outliers, in particular, often add more noise than signal anyway.
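Here is a minimal Python sketch of two of those options: dropping incomplete records, and imputing missing values with the median of what was observed. The scores are hypothetical, and median imputation is just one simple technique; it keeps the sample size but understates real variability, so model-based estimation is often preferable for important analyses.

```python
from statistics import median

# Hypothetical survey scores (1-5); None marks a missing response
scores = [4, 5, None, 3, 4, None, 5]
observed = [s for s in scores if s is not None]

# Option 1: drop incomplete records (complete-case analysis)
dropped = observed

# Option 2: impute each gap with the median of the observed scores
fill_value = median(observed)
imputed = [s if s is not None else fill_value for s in scores]

print(len(dropped), fill_value, imputed)  # 5 4 [4, 5, 4, 3, 4, 4, 5]
```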

By systematically addressing both missing data and errors, we ensure the integrity and reliability of the results. It's like meticulous housekeeping: essential to ensuring that the data reflects reality, not a distorted version of it. This approach ultimately leads to more accurate and actionable insights.

Before drawing conclusions about performance, it's important to look beyond the results themselves and consider the validity of the data used to measure that performance. Professor Haas of the Wharton School offers a valuable checklist for identifying strong performance measures:

Level of analysis: Who are we measuring? Individuals, teams, or the entire organization?

Reliability: Are the assessments consistent? Do they produce the same results over time and across raters?

Validity: Do the assessments accurately measure what they're supposed to? Are we capturing the true essence of performance?

Comparability: Can the data be consistently measured and meaningfully applied to all units within the data set?

Comprehensiveness: Is the data available for most or all entities within the data set? Limited availability may skew results.

Cost-effectiveness: Is the data collection process cost-effective? Are there more efficient ways to collect the information?

Causality: Can the data be used to establish a defensible causal relationship between actions and outcomes?

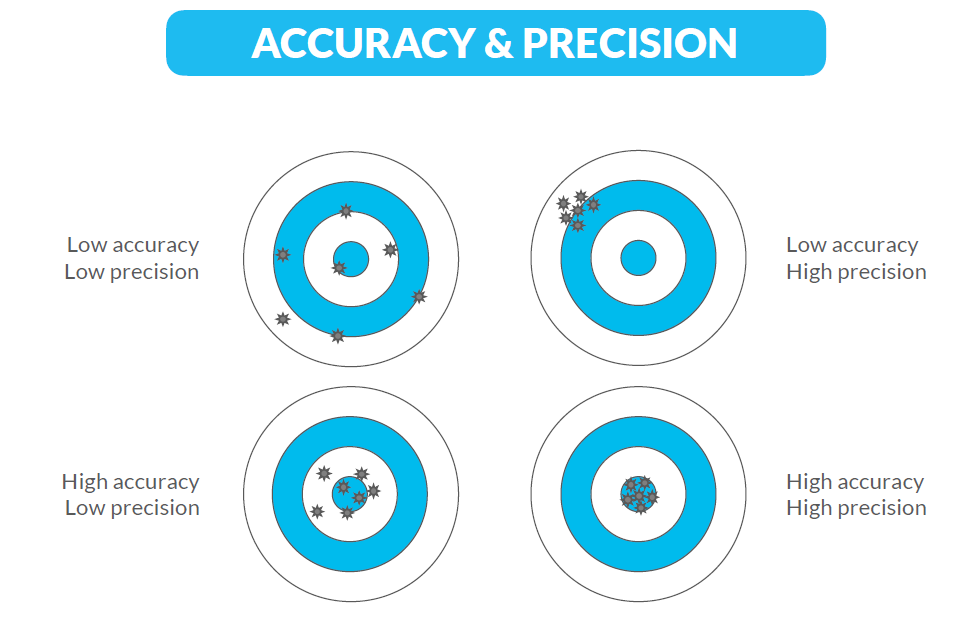

Ideally, you want both high precision (reliability) and high accuracy (validity), meaning that your measurements are clustered together and close to the true value. The two are easy to confuse. Accuracy refers to how close a measurement is to the true value. Precision refers to how close multiple measurements are to each other, regardless of how close they are to the true value. Imagine you're throwing darts at a dartboard. Accuracy is how close your darts land to the bullseye. Precision is how tightly your darts are grouped, even if the group is nowhere near the bullseye. Erik van Vulpen has prepared the visual explanation shown above. For example, the circle labeled both "High Precision" and "Low Accuracy" shows dots grouped tightly together but far from the target.
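The dartboard intuition can be put into numbers. In this Python sketch, accuracy is measured as the distance between the average measurement and the true value, and precision as the spread (standard deviation) of the measurements. Both data sets and the true value of 10 are hypothetical; in people analytics the true value is rarely known, which is exactly what makes validity hard to check.

```python
from statistics import mean, pstdev

TRUE_VALUE = 10.0  # hypothetical true value, assumed known for this illustration


def accuracy_error(measurements, true_value=TRUE_VALUE):
    """Distance of the average measurement from the true value (lower = more accurate)."""
    return abs(mean(measurements) - true_value)


def spread(measurements):
    """Standard deviation of the measurements (lower = more precise)."""
    return pstdev(measurements)


# Hypothetical repeated measurements of the same quantity
precise_but_biased = [13.0, 13.1, 12.9, 13.0]  # tight group, far from the target
accurate_but_noisy = [8.0, 12.0, 9.0, 11.0]    # centred on the target, scattered

for label, data in [("precise but biased", precise_but_biased),
                    ("accurate but noisy", accurate_but_noisy)]:
    print(f"{label}: error {accuracy_error(data):.2f}, spread {spread(data):.2f}")
```

The first set scores well on precision but poorly on accuracy; the second is the reverse. Only a set that is both tightly grouped and centred on the true value would earn high marks on both.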

Relating the stars to the constellations

Data breeds data: the more information we collect, the more data we create. Raw numbers are like individual stars in the night sky. Each star is a piece of data, but it doesn't tell the whole story. Relative numbers are like constellations. By connecting the stars (data points) in a particular way, you can see patterns and shapes (comparisons and trends) that you wouldn't see by looking at individual stars alone.

The ever-increasing volume of data makes it necessary to track not only raw numbers (unadjusted metrics), but also relative numbers. As mentioned earlier, a number by itself means little. It takes on meaning when compared to a goal, a similar record from another time period, or a different category. For example, total monthly sales or new customer acquisitions are raw numbers. Sales growth over the previous month or the number of new customers as a percentage of the total potential market are relative numbers.

Understanding the difference between these two types of metrics allows you to display relationships between numbers in a variety of ways. You can divide them to show ratios or percentages, multiply them using weights, or subtract them to show differences. Consistency in calculations is critical to avoid the need to constantly review the underlying data.
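As an illustration, the Python sketch below derives relative numbers from raw ones using differences, ratios, and weights. All figures are hypothetical, and the 0.7/0.3 weights in the combined index are an arbitrary assumption; the useful habit is defining each calculation once and reusing it, so the numbers stay consistent.

```python
def difference(current, previous):
    """Absolute change between two periods."""
    return current - previous


def pct_change(current, previous):
    """Relative change, in percent, versus the previous period."""
    return 100 * (current - previous) / previous


def pct_share(part, whole):
    """Part as a percentage of a whole."""
    return 100 * part / whole


# Hypothetical raw numbers for one month
sales_this_month, sales_last_month = 55_000, 50_000
new_customers, potential_market = 120, 4_000

change = difference(sales_this_month, sales_last_month)        # 5000
growth_pct = pct_change(sales_this_month, sales_last_month)    # 10.0
market_share_pct = pct_share(new_customers, potential_market)  # 3.0

# Weighted index combining both relative numbers (weights are an assumption)
WEIGHTS = {"growth": 0.7, "acquisition": 0.3}
index = WEIGHTS["growth"] * growth_pct + WEIGHTS["acquisition"] * market_share_pct
print(change, growth_pct, market_share_pct, round(index, 1))
```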

Once you put things in context, you might ask - is this good or bad? Using both absolute and relative measures allows you to fairly evaluate performance from different starting points. For example, a small, new team or product may not be able to match the high volumes or revenues of established counterparts in terms of raw numbers. Here, relative metrics can highlight their growth or efficiency improvements, demonstrating significant progress from their specific baselines.
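A quick Python sketch shows how the same period can look very different in absolute and relative terms. The two products and their unit sales are hypothetical.

```python
# Hypothetical units sold: (last quarter, this quarter)
products = {
    "new_product": (5, 10),
    "established_product": (500, 550),
}

results = {}
for name, (before, after) in products.items():
    absolute_gain = after - before
    relative_gain_pct = 100 * absolute_gain / before
    results[name] = (absolute_gain, relative_gain_pct)
    print(f"{name}: +{absolute_gain} units, +{relative_gain_pct:.0f}%")
# new_product: +5 units, +100%
# established_product: +50 units, +10%
```

In raw terms the established product gained ten times as many units; in relative terms the new one grew ten times faster. Reporting both avoids penalizing a team for its starting point.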

But relying on a single metric can be misleading. Imagine a recruiter who is ecstatic about a 70% response rate to their InMail outreach on LinkedIn. At first, it seems like a big win. But on closer inspection, they discover that many of the responses come from unqualified candidates. This is a classic example of the pitfalls of relying solely on response rates, which can fail to capture the true quality of the talent pool.

Even seemingly impressive response rates can be deceiving. A high cumulative response rate achieved through multiple attempts may not reflect the effectiveness of the initial outreach. For example, a software company might boast a 90% response rate for its email marketing product, but that number could be misleading if it represents responses after multiple follow-up messages. Similarly, my own efforts to reach female tech talent resulted in a 24% response rate, but only after sending three unique messages. A single outreach attempt yielded a much lower response rate of only 11%. This highlights the importance of considering the context behind response rates.
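The gap between first-touch, cumulative, and qualified response rates is easy to compute once each reply is logged with the message that triggered it. This Python sketch uses a hypothetical log of ten candidates contacted up to three times; the numbers are invented and are not the figures quoted above.

```python
# Hypothetical outreach log, one entry per candidate:
# (message number that got a reply, or None if no reply; was the candidate qualified?)
outreach = [
    (1, True), (None, False), (2, False), (None, False), (3, False),
    (1, False), (None, False), (None, False), (None, False), (None, False),
]
total = len(outreach)

# Replies to the very first message only
first_touch_rate = sum(1 for msg, _ in outreach if msg == 1) / total
# Replies after any number of follow-ups
cumulative_rate = sum(1 for msg, _ in outreach if msg is not None) / total
# Replies from qualified candidates only
qualified_rate = sum(1 for msg, ok in outreach if msg is not None and ok) / total

print(f"first touch {first_touch_rate:.0%}, cumulative {cumulative_rate:.0%}, "
      f"qualified {qualified_rate:.0%}")
# first touch 20%, cumulative 40%, qualified 10%
```

The same campaign can be reported as a 40% success or a 10% one, so it pays to state which rate you mean.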

Consider another talent acquisition example: job boards can be a valuable recruiting tool. However, to ensure optimal results, we need to analyze which roles, such as sales or marketing positions (commercial roles) versus engineering positions (technical roles), are most successfully filled through specific platforms.

Understanding job board performance goes beyond simply counting the number of hires. We also want to assess cost effectiveness. To do this, we can calculate the total cost per hire for each platform by dividing the platform's annual cost by the number of hires it generates. This allows us to compare different job boards.
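In Python, the cost-per-hire comparison might look like this minimal sketch. The board names, annual costs, and hire counts are hypothetical, and only the annual fee is counted, as in the text; a fuller version might add recruiter time or advertising spend.

```python
# Hypothetical annual costs and hires per job board
job_boards = {
    "BoardA": {"annual_cost": 12_000, "hires": 8},
    "BoardB": {"annual_cost": 30_000, "hires": 10},
    "BoardC": {"annual_cost": 5_000, "hires": 0},
}


def cost_per_hire(annual_cost, hires):
    """Annual platform cost divided by hires; None if the board produced no hires."""
    return annual_cost / hires if hires else None


def sort_key(item):
    """Cheapest cost per hire first; boards with no hires go last."""
    cph = cost_per_hire(**item[1])
    return float("inf") if cph is None else cph


ranking = [(name, cost_per_hire(**stats))
           for name, stats in sorted(job_boards.items(), key=sort_key)]
print(ranking)
# [('BoardA', 1500.0), ('BoardB', 3000.0), ('BoardC', None)]
```

Returning `None` instead of dividing by zero keeps a board with no hires visible at the bottom of the ranking rather than crashing the report.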

A more sophisticated method is to calculate the "effort per hire" for each platform, derived by dividing the number of applicants by the number of hires. A lower ratio indicates a more efficient channel. For example, if a particular job board needs 170 applicants to produce a single hire (a 170:1 ratio), it is a less efficient source than a board with an applicant-to-hire ratio of 17:1.

There are many ways to analyze data for optimal job board selection. Here are some key metrics to consider for each channel:

Percentage of Applicants: What percentage of total applicants come from this channel?

Percentage of Hires: What percentage of total hires came from this channel?

Application-to-Hire Ratio: What share of applicants from this channel typically convert to hires?

Effort for 1 hire: How many applicants from this channel are necessary to make a single hire?
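The four metrics above can be computed together per channel. This Python sketch uses hypothetical applicant and hire counts, chosen so that two boards reproduce the 170:1 and 17:1 effort ratios from the earlier example; "application-to-hire" is expressed here as the percentage of applicants who were hired, which is one reasonable reading of that metric.

```python
# Hypothetical applicants and hires per channel
channels = {
    "JobBoardX": {"applicants": 340, "hires": 2},
    "JobBoardY": {"applicants": 170, "hires": 10},
    "Referrals": {"applicants": 40, "hires": 8},
}

total_applicants = sum(c["applicants"] for c in channels.values())
total_hires = sum(c["hires"] for c in channels.values())


def channel_metrics(applicants, hires):
    """Return the four channel metrics described above."""
    return {
        "pct_of_applicants": 100 * applicants / total_applicants,
        "pct_of_hires": 100 * hires / total_hires,
        # Share of this channel's applicants who became hires
        "application_to_hire_pct": 100 * hires / applicants if applicants else 0.0,
        # Applicants needed for one hire (None if the channel produced no hires)
        "effort_per_hire": applicants / hires if hires else None,
    }


metrics = {name: channel_metrics(**c) for name, c in channels.items()}
for name, m in metrics.items():
    effort = "n/a" if m["effort_per_hire"] is None else f"{m['effort_per_hire']:.0f}:1"
    print(f"{name}: {m['pct_of_applicants']:.1f}% of applicants, "
          f"{m['pct_of_hires']:.1f}% of hires, "
          f"{m['application_to_hire_pct']:.1f}% converted, effort {effort}")
```

Read side by side, JobBoardX brings in most of the applicants but only a tenth of the hires, while referrals are a small channel with by far the best conversion.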

Deceived by the clouds

Humans are wired to look for patterns, even in random occurrences, like shapes in clouds. This has served us well in the past, helping us identify threats and opportunities in our environment. In the modern world of data analysis, however, it can lead us astray. We can become so fixated on creating a compelling story that we miss the nuances of the data.

I recently came across a quote that perfectly illustrates our human tendency to favor narrative over factual accuracy: "When the legend becomes fact, print the legend." The line comes from the movie "The Man Who Shot Liberty Valance," where a newspaper editor, having just heard the true story behind a famous gunfight, decides not to publish it, choosing the legend of a hero over the less dramatic truth.

Views that contradict our initial assumptions may not even register; this is the risk of overfitting the data to the story we want to tell. Measuring individual performance is a case in point. It can be tricky, not only because of legal restrictions, but also because such calculations become less reliable in small teams, where a handful of data points makes genuine trends hard to distinguish from chance.

People often mistakenly believe that small samples accurately represent the entire population, a belief Tversky and Kahneman called the "law of small numbers". Our natural tendency to look for narratives, even in unrelated data points, leads us to calculate averages blindly, without considering the role of variability and chance. Yet smaller samples are more likely to show extreme values purely by chance.

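This follows from the Law of Large Numbers: as the sample size increases, the sample mean tends to get closer to the population mean. The related Central Limit Theorem adds that the distribution of the sample mean approaches a normal distribution, whose standard deviation equals the population standard deviation divided by the square root of the sample size. In simpler terms, with a larger data set, we are less likely to be misled by random fluctuations and get a clearer picture of the underlying reality.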
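A short simulation makes the point visible. This Python sketch draws many samples of size 5 and of size 500 from the same hypothetical population (mean 50, standard deviation 10, both arbitrary assumptions) and measures how much the sample means vary. Theory predicts a spread of about 10/√5 ≈ 4.5 for the small samples and about 10/√500 ≈ 0.45 for the large ones.

```python
import random
from statistics import pstdev

random.seed(42)  # fixed seed so the run is reproducible

POP_MEAN, POP_SD = 50, 10  # hypothetical population parameters


def spread_of_sample_means(sample_size, trials=1_000):
    """Standard deviation of the means of `trials` random samples of `sample_size`."""
    means = []
    for _ in range(trials):
        sample = [random.gauss(POP_MEAN, POP_SD) for _ in range(sample_size)]
        means.append(sum(sample) / sample_size)
    return pstdev(means)


small = spread_of_sample_means(5)
large = spread_of_sample_means(500)
print(f"spread of means, n=5: {small:.2f}; n=500: {large:.2f}")
```

The small samples' means wander roughly ten times more than the large samples' means, which is how a handful of data points can produce an "extreme" result by chance alone.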

Sample size matters in a variety of scenarios. Consider reviewing resumes for a new position. A small applicant pool (limited sample size) could be skewed by a single exceptional or weak candidate. Conversely, a larger pool (increased sample size) provides a more accurate picture of the average candidate's qualifications. Similarly, the initial open rate of a new sales email campaign with a small sample size (few emails sent) could be misleading. As you send it to a larger audience (increased sample size), the open rate stabilizes and provides a more reliable measure of recipient interest.
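For the email example, a rough margin of error shows how much a small sample can mislead. This Python sketch uses the normal approximation to the binomial, which is crude for very small samples (a Wilson interval is a common alternative); the open counts are hypothetical.

```python
from math import sqrt


def open_rate_with_margin(opens, sent, z=1.96):
    """Observed open rate and an approximate 95% margin of error
    (normal approximation; unreliable for very small samples)."""
    p = opens / sent
    margin = z * sqrt(p * (1 - p) / sent)
    return p, margin


# Hypothetical campaigns with the same 30% open rate but different sizes
for opens, sent in [(6, 20), (600, 2_000)]:
    p, m = open_rate_with_margin(opens, sent)
    print(f"{sent} emails: {p:.0%} open rate, plus or minus {m:.0%}")
```

Both campaigns show a 30% open rate, but the first could plausibly be anywhere from about 10% to 50%, while the second is pinned down to within about two percentage points.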

Using a sufficiently large sample is critical to obtaining statistically sound and reliable analyses, because it reduces the role of chance in data-driven decisions. Sample size alone, however, does not protect against selection biases such as survivorship bias, where only successes are considered. In short, a strong data foundation is essential for developing a balanced view and making informed decisions. Let's now look at survivorship bias (also known as survivor bias) more closely.

Under the tip of the iceberg

The quote "Amateurs worry about what they see. Professionals worry about what they can't see," shared by Shane Parrish on his blog, perfectly captures the essence of Abraham Wald's realization during World War II. Wald, a mathematician, was analyzing data on damaged Allied bombers returning from combat missions. He noticed that the damage patterns reflected only the hits a plane could survive and still fly back. This led him to suggest reinforcing the areas that showed little damage, because hits there had likely been fatal for the bombers that never returned (and were therefore missing from the data).

Wald's analysis of the bomber data also serves as a cautionary tale for modern data analysis. The data we see often represents the "survivors": the products, people, or strategies that made it through a selective screening process. To mitigate this, we must actively seek out what is missing from the data, such as initiatives that were discarded early or candidates who were not selected.

In recruiting, we often focus on the wins - the rockstars we bring on board. But there's a whole other side to the story, the positions that never get filled, the no-hire requisitions. Kelsie Telson Teodoro wrote a great post about this on the DataPeople blog. It turns out that a whopping 31% of job requisitions never resulted in a hire. That's a lot of wasted resources and a drag on the efficiency of your recruiting machine. Here's the kicker: If you only look at "wins," you'll get a distorted picture of how long it really takes to fill jobs. You might say: Bad data, bad decisions. By excluding these no-hire requisitions, metrics like time-to-close are skewed, leading to inaccurate performance assessments.

Analyzing no-hire requisitions also reveals potential blind spots in your process, enabling data-driven adjustments that save time and resources. Continuously measuring results at each stage of the hiring process helps identify specific areas for improvement. For example, distinguishing between "time to fill" (which includes only successful hires) and "time to close" (which includes both successful and unsuccessful requisitions) provides a more accurate measure of recruiting efforts. Requisitions that do not result in a hire typically have a longer time to close, impacting overall recruiting timelines. Including them in your analysis helps you better forecast and allocate resources, ensuring a more realistic understanding of your recruiting processes.
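The difference between the two measures is easy to see in code. In this Python sketch the requisitions and their durations are hypothetical; "time to fill" averages only requisitions that ended in a hire, while "time to close" averages all of them, as defined above.

```python
from statistics import mean

# Hypothetical requisitions: (days from opening to closing, ended in a hire?)
requisitions = [
    (30, True), (45, True), (25, True), (40, True),
    (90, False), (120, False),
]

filled_days = [days for days, hired in requisitions if hired]

time_to_fill = mean(filled_days)                        # successful hires only
time_to_close = mean(days for days, _ in requisitions)  # every requisition
no_hire_share = 1 - len(filled_days) / len(requisitions)

print(f"time to fill: {time_to_fill:.0f} days; time to close: {time_to_close:.0f} days; "
      f"no-hire share: {no_hire_share:.0%}")
```

Reporting only the 35-day time to fill would hide that, across all requisitions in this made-up sample, the team actually spends closer to 58 days per requisition.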

Likewise, it is important to analyze the profiles of candidates who were not hired to understand the full spectrum of your hiring process. This approach can reveal hidden patterns and opportunities for improvement, ultimately leading to a more effective and fair recruitment strategy. For instance, clear and well-calibrated job postings attract a more diverse and qualified candidate pool, making it more likely that the right candidates apply.

Before sunset, after sunrise

Traditionally, KPIs have often been viewed as lagging indicators: metrics that reflect past performance, such as "time to hire" (the average number of days it takes to fill a vacancy). While valuable for understanding historical trends, lagging indicators provide little insight into current effectiveness or future improvement. Focusing on them alone is like driving while looking only in the rearview mirror: you can see where you've been, but you can't anticipate upcoming hazards or optimize your route.

A more comprehensive approach combines lagging and leading indicators to get a complete picture. Lagging indicators tell us where we've been, while leading indicators help us identify potential roadblocks and inform proactive changes to optimize processes. Together, they enable data-driven decisions that improve overall performance.

Leading indicators work in a similar way to forward-looking financial metrics, such as sales pipeline growth or customer churn. These metrics provide valuable insight into potential future performance. For example, a recruiting team might monitor application volume or website traffic as leading indicators. A significant decline in these metrics could signal a potential decline in qualified candidates (lagging indicator). This foresight allows for proactive adjustments, such as revamping the career page or launching targeted marketing campaigns, to mitigate any negative impact on future hires.
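A leading indicator is only useful if someone notices when it moves. This Python sketch flags weeks where application volume drops well below its recent average; the weekly counts, the three-week baseline, and the 15% threshold are all hypothetical choices to tune for your own data.

```python
# Hypothetical weekly application volume for one role family
weekly_applications = [120, 118, 125, 122, 95, 80]


def flag_drops(series, window=3, threshold=0.15):
    """Return (week_index, pct_change) for weeks where volume fell by more than
    `threshold` compared with the average of the previous `window` weeks."""
    alerts = []
    for i in range(window, len(series)):
        baseline = sum(series[i - window:i]) / window
        change = (series[i] - baseline) / baseline
        if change < -threshold:
            alerts.append((i, round(change * 100, 1)))
    return alerts


alerts = flag_drops(weekly_applications)
print(alerts)
```

Weeks 4 and 5 are flagged, giving the team a chance to revisit the career page or launch a campaign before the drop shows up in lagging metrics such as hires.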

In recruiting, then, lagging indicators such as time-to-hire or cost-per-hire show how past hiring went, while leading indicators such as application volume flag roadblocks and opportunities for optimization early. Analyzing both together gives a holistic understanding of the recruiting process, enabling proactive adjustments and, ultimately, more efficient recruiting.

Racing a blinkered horse

Data, in all its forms, has become an undeniable force in the modern world. We love the insights it provides, but it's important to remember that data is a tool, not a magic solution. As powerful as numbers can be, they don't always tell the whole story. Sometimes the data contradicts your experience or intuition, and that's a perfectly valid situation. A truly comprehensive approach recognizes the limitations of data analysis and embraces a balanced perspective.

There's always the possibility of errors in data collection, analysis, or interpretation. In addition, data can reflect the way it was collected, potentially leading to biased results. For example, poorly designed surveys or leading questions can produce misleading data. Logical fallacies can also cloud the interpretation of data. Correlation does not necessarily imply causation: just because two things happen together doesn't mean that one causes the other. Recognizing these potential pitfalls is essential to drawing sound conclusions from data.

A well-rounded approach to decision making goes beyond raw data. Consider incorporating user feedback in addition to quantitative analysis. Understanding user needs and pain points can provide valuable qualitative insights that complement the data. Your own expertise also plays a critical role. Your experience and judgment can help bridge the gap between data and real-world application. Understanding the broader business landscape, including market trends and corporate culture, further enriches the decision-making process.

Selena Fisk makes a key point in "I'm Not a Numbers Person": "there is an important distinction to be made between being data-informed (which is what we want to be) and being data-driven (what we do not want to be). Being data-driven is like a horse wearing blinkers in a horse race – they can see the finish line and the goal, but they can’t see what is going on either side of them. They race towards the finish line, with minimal distractions, and a limited understanding of what other horses and riders are doing. Data-driven organizations are ruthless around the numbers."

Being data-informed means using the numbers to guide your path without blindly following them. It's about taking into account the people you work with, current market trends, and even your company's culture. It's about using data as a tool, not a crutch, to make the best possible decisions. By combining quantitative data with real-world insights, you can make well-rounded decisions that are both insightful and actionable, and navigate the complexities of today's world with confidence.

Turning oil into sand

As we emerge from the data jungle, we find the path forward illuminated not by a single glow, but by a harmonious interplay of light sources. Quantitative data serves as a powerful flashlight, illuminating a bright path ahead. But to navigate the complex terrain and hidden obstacles, we must use the complementary glow of qualitative insights, professional expertise, and a comprehensive understanding of the broader business landscape.

The journey through the data wilderness is not a destination, but an ongoing exploration. As we venture further and hone our analytical skills, we will inevitably encounter new challenges and uncover previously unseen patterns. This ongoing exploration requires a spirit of critical inquiry, a willingness to challenge existing assumptions, and an openness to diverse perspectives.

Data in all its forms is a powerful tool. But ultimately, it's a tool wielded by people. By taking a balanced approach and continually honing our critical thinking skills, we can transform raw information into actionable insights that drive strategic decision-making. Let us continue to explore, adapt, and unlock the true potential of data to light the way to a future of informed and impactful decisions.

Tim O'Reilly famously compared data to sand - abundant but requiring processing to unlock its true value. He emphasizes analyzing large data sets, not individual pieces, like sifting valuable grains. The same data can be reused endlessly, unlike oil's single-use nature. Just as we've applied critical thinking and diverse perspectives throughout our data exploration, these skills are crucial for transforming raw data into a powerful tool for informed decision-making.

-- Michael Talarek

This is part 1 of a larger article consisting of 4 parts. More to come soon. In the meantime, if you want to learn more about reading data, be sure to check out my ebook "Excelling at Dashboards with Google Spreadsheets".

References:

Carl Allchin, “Communicating with Data: Making Your Case with Data”

Elizabeth Matsui and Roger Peng, “The Art of Data Science: A Guide for Anyone Who Works with Data”

Tim Harford, “The Data Detective: Ten Easy Rules to Make Sense of Statistics”

Daniel Kahneman, “Thinking, Fast and Slow”

Selena Fisk, “I'm Not a Numbers Person: How to Make Good Decisions in a Data-Rich World”

Tim O’Reilly, “Data Is the New Sand” | https://www.theinformation.com/articles/data-is-the-new-sand

Shane Parrish, “Turning Pro: The Difference Between Amateurs and Professionals” | https://fs.blog/amateurs-professionals/

Erik van Vulpen, “The Basic Principles of People Analytics” | https://www.aihr.com/resources/The_Basic_principles_of_People_Analytics.pdf

Kelsie Telson Teodoro, “Using No-Hire Requisitions for Data-Driven Insights” | https://datapeople.io/article/using-no-hire-requisitions-for-data-driven-insights/

“People Analytics” course, The Wharton School, University of Pennsylvania (Coursera) | https://www.coursera.org/learn/wharton-people-analytics